At the intersection of AI and creativity, artists are exploring the limits and possibilities of technology by repurposing techniques such as ‘data poisoning.’ Data poisoning is the deliberate introduction of incorrect or misleading information into a model’s training data in order to manipulate the model’s behavior or outputs. Through this practice, artists question AI’s reliability and autonomy while highlighting the human element that underlies it.
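To make the definition concrete, here is a minimal sketch of one form of poisoning: injecting deliberately mislabeled points into the training set of a very simple nearest-centroid classifier. The toy one-dimensional data, the classifier, and the names `fit_centroids`, `predict`, and `accuracy` are illustrative assumptions, not code from any artist or study mentioned here.

```python
# Toy 1-D data: under the true rule, x < 5 is class 0 and x >= 5 is class 1.

def fit_centroids(points):
    """Train a nearest-centroid classifier: the mean feature value of each class."""
    sums, counts = {}, {}
    for x, label in points:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def accuracy(centroids, data):
    """Fraction of (x, label) pairs the classifier gets right."""
    correct = sum(predict(centroids, x) == y for x, y in data)
    return correct / len(data)

clean_train = [(x, 0) for x in range(0, 5)] + [(x, 1) for x in range(6, 11)]
test_set = list(clean_train)  # the same points, all correctly labeled

# Poisoning: inject a few points that contradict the true rule (x = 20 labeled as class 0).
poison = [(20, 0)] * 5
poisoned_train = clean_train + poison

print(accuracy(fit_centroids(clean_train), test_set))     # 1.0
print(accuracy(fit_centroids(poisoned_train), test_set))  # 0.4
```

Five injected points drag the class-0 centroid from 2.0 to 11.0, so most correctly labeled test points end up closer to the wrong centroid. Attacks on real neural networks are far subtler, but the mechanism is the same: corrupt what the model learns from, and its outputs follow.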

Real cases of data poisoning have been reported in several domains. Spam filter training data has been manipulated to degrade filtering performance. In autonomous driving, altered datasets have caused models to misidentify certain road signs, compromising safety. There have also been cases in which facial recognition training data was manipulated to create racial disparities in recognition accuracy. These are important studies that raise awareness of fairness and ethics in AI.

One example of data poisoning in art is the work of Mario Klingemann. He has experimented with generating artworks by deliberately feeding biased data to machine learning models during training. These experiments prompt viewers to consider the creative limits of technology and how human intention shapes its outputs. His work suggests that AI does not achieve creativity without human intervention, and it poses new questions to audiences about the interaction between art and technology.

Klingemann’s works fall primarily within ‘network art’ and ‘AI art,’ exploring how data poisoning leads AI systems to produce distorted results. They invite reflection not only on technical questions but also on how AI influences human creativity.

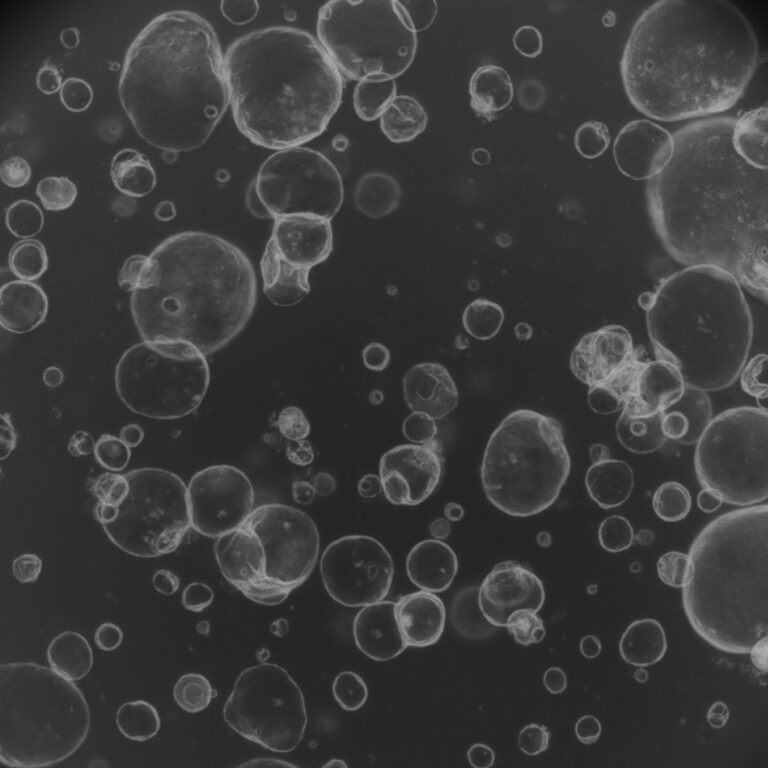

Data poisoning is also a threat to medical AI, including automated systems used in drug development, where it can cause models to malfunction. Recent research has experimentally demonstrated security threats to medical data, showing that poisoned data can significantly degrade neural-network-based diagnostic models. In particular, models trained on medical images with incorrect labels have been reported to fail at accurately diagnosing cancer and other diseases. Preventing this requires AI algorithms and models that are resistant to data poisoning: able to detect attacks and minimize the damage they cause. In addition, encrypting and authenticating data as it is stored and transmitted can reduce the opportunity for poisoning.
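The authentication step mentioned above can be sketched with Python’s standard `hmac` module: the data owner computes a keyed HMAC-SHA256 tag over a labeled dataset, and whoever trains the model recomputes it before use. The record fields, the key, and the function names `sign_dataset` and `verify_dataset` are hypothetical, chosen only for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real deployment would load this from a key-management system.
SECRET_KEY = b"example-key-not-for-production"

def sign_dataset(records, key=SECRET_KEY):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the records."""
    payload = json.dumps(records, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_dataset(records, tag, key=SECRET_KEY):
    """Recompute the tag and compare in constant time; False means the data was altered."""
    return hmac.compare_digest(sign_dataset(records, key), tag)

# Hypothetical labeled medical-image records.
records = [
    {"image_id": "scan_001", "label": "malignant"},
    {"image_id": "scan_002", "label": "benign"},
]
tag = sign_dataset(records)
print(verify_dataset(records, tag))  # True

tampered = [dict(r) for r in records]
tampered[0]["label"] = "benign"  # an attacker flips one label in transit
print(verify_dataset(tampered, tag))  # False
```

A check like this only detects changes made after the tag was computed. It cannot catch data that was already poisoned before signing, which is why poisoning-resistant models and training algorithms remain necessary alongside it.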